#CS250#Information#Computer-Science

- Floating point mimics scientific notation

- $a \times r^{b}$

- where a = significant digits

- b = order of magnitude

- r = radix or base

- Example: $6.02 \times 10^{23}$ has $a = 6.02$, $r = 10$, $b = 23$

- Floating point is very important

- Used for high value computations

- Floating point processors used to be an entirely separate component

- Floating point representation has more variation

- You need to choose both precision and range

- There are also two plus or minus signs (one for the significant digits, one for the exponent), so is each stored as sign magnitude or as 2’s complement?

- How many range bits and precision bits do we choose?

- If each computer uses a different floating point interpretation, then we can’t move software from one to another very easily!

- Software is nearly non-portable due to casting issues

- To solve these issues, a standardized floating point representation was made

IEEE Floating Point Representation

- Floating point format specified at a bit string level

- Results are rounded in a defined way, and the format even has exceptions/error codes for better error handling

- Floating point software and data can now run unmodified on a lot of computers

- One major issue: addition of floating point numbers is not associative, because every intermediate result is rounded

- Range of values for floating point numbers: about

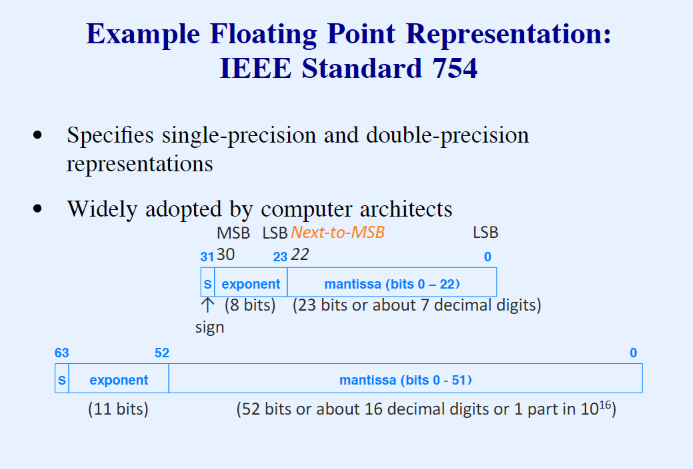
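The non-associativity point can be seen directly in a short sketch. This assumes Python, whose `float` is IEEE double precision rather than the single-precision format these notes describe; the variable names are only illustrative.

```python
# Floating point addition is not associative: the grouping decides
# which intermediate result gets rounded.
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left == right)  # False

# A more dramatic case: a small term is absorbed by a large one.
big = 1e20
absorbed = (1.0 + big) - big  # 1.0 is lost when it is added to 1e20 first
kept = 1.0 + (big - big)      # the large terms cancel first, so 1.0 survives
print(absorbed, kept)  # 0.0 1.0
```

Mathematically both groupings give the same sum, so the difference comes entirely from rounding the intermediate results.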

Format basics

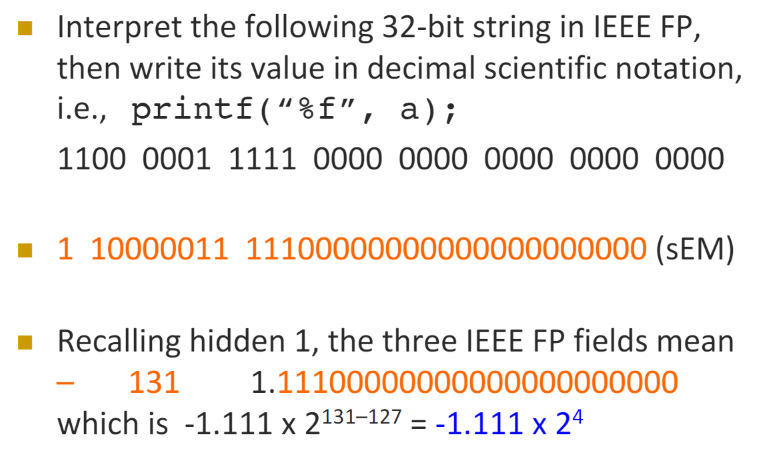

- Sign | Biased Exponent | Mantissa (with a hidden 1 as the most significant bit)

- Also can be written as s(ign)|E(xponent)|M(antissa) - s|E|M

- Sign field pairs with the mantissa

- 0 is positive, 1 is negative

- Exponent representation is a biased Unsigned Integer

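- An 8 bit exponent field stores an unsigned value from 0 to 255

- We represent the true exponent as itself + a bias value of 127.

- Now the stored range is 1 to 254 for normalized numbers (true exponents -126 to 127), with 0 and 255 reserved for special values; the sign of the exponent is implicit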

- Biased exponents let us interpret the whole bit string as one sign magnitude integer, so two floating point numbers can be compared the same way as integers

- Mantissa values are normalized

- In binary the value must have the form $1.xxx\ldots$ (exactly one 1 to the left of the binary point)

- Because that leading bit is always a 1, we don’t need to store it. The circuit adds it back in when reading a value and strips it out when storing

- Extra precision at no memory cost!
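The s|E|M layout above can be sketched in Python with the standard `struct` module. The helper names `decode_float32` and `value_of_normalized` are hypothetical, and the sketch assumes the single-precision layout (1 sign bit, 8 exponent bits, 23 mantissa bits, bias 127).

```python
import struct

BIAS = 127  # single-precision exponent bias


def decode_float32(x):
    """Split x (rounded to single precision) into its s|E|M fields."""
    # Reinterpret the 4 bytes of the float as a 32-bit unsigned integer.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF  # stored (biased) exponent
    mantissa = bits & 0x7FFFFF      # 23 stored bits, hidden 1 not included
    return sign, exponent, mantissa


def value_of_normalized(sign, exponent, mantissa):
    """Rebuild the value of a normalized number from its fields."""
    significand = 1 + mantissa / 2**23  # put the hidden 1 back
    return (-1) ** sign * significand * 2.0 ** (exponent - BIAS)


s, e, m = decode_float32(-6.5)
print(s, e, hex(m))                  # 1 129 0x500000
print(value_of_normalized(s, e, m))  # -6.5
```

Here -6.5 is $-1.101_2 \times 2^{2}$, so the stored exponent is 2 + 127 = 129 and only the `.101` part of the significand is stored.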

Floating Point Error Handling

- If the sign is 0 or 1, and everything else is 0, then it encodes plus or minus 0.

- When the Exponent is 0 and the mantissa bits are not all 0, that encodes a denormalized number: the hidden MSB is treated as 0 instead of 1

- This enables gradual underflow, reducing the noise that underflow adds to a computation

- Sign of 0/1, Exponent of 255, and Mantissa bits of 0 encodes plus or minus infinity

- Plus or minus overflow is set to plus or minus infinity, allowing you to respond to overflow errors

- Exponent of 255 and mantissa bits that are not all 0 encodes Not a Number (NaN)
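The special encodings listed above can be summarized as a small classifier over raw 32-bit patterns. This is a sketch assuming the single-precision layout; `classify_float32` is a hypothetical helper name.

```python
def classify_float32(bits):
    """Name the kind of value a 32-bit single-precision pattern encodes."""
    exponent = (bits >> 23) & 0xFF  # 8 stored exponent bits
    mantissa = bits & 0x7FFFFF      # 23 stored mantissa bits
    if exponent == 0:
        # All-zero exponent: zero or a denormalized number (the sign bit is free).
        return "zero" if mantissa == 0 else "denormalized"
    if exponent == 255:
        # All-one exponent: infinity or NaN (the sign bit is free).
        return "infinity" if mantissa == 0 else "NaN"
    return "normalized"


print(classify_float32(0x80000000))  # zero (this pattern is -0)
print(classify_float32(0x00000001))  # denormalized
print(classify_float32(0xFF800000))  # infinity (this pattern is -infinity)
print(classify_float32(0x7FC00000))  # NaN
print(classify_float32(0x3F800000))  # normalized (this pattern is 1.0)
```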

Rounding

- The standard builds in rounding in a way that retains as much precision as possible

- Inaccurate rounding injects noise (error) into a computation

- Rounding modes in IEEE Floating Point Standard

- Round to nearest

- The result is as if the computation was done with infinite precision and then rounded to the nearest representable value

- Default rounding mode for standard

- Round towards positive/negative infinity

- Round towards 0

- Truncation

- Very noisy form of rounding

- Kept purely for compatibility

- Round to nearest
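Python does not expose the binary rounding mode of its floats, so the sketch below uses the standard `decimal` module as a decimal analogy for the four modes listed above. The `MODES` table and `round_all` helper are hypothetical names. `ROUND_HALF_EVEN` stands in for round to nearest, since the IEEE default breaks ties toward the even neighbour.

```python
from decimal import Decimal, ROUND_HALF_EVEN, ROUND_CEILING, ROUND_FLOOR, ROUND_DOWN

# Decimal stand-ins for the four rounding modes in the notes.
MODES = {
    "nearest": ROUND_HALF_EVEN,
    "toward +inf": ROUND_CEILING,
    "toward -inf": ROUND_FLOOR,
    "toward 0": ROUND_DOWN,  # truncation
}


def round_all(text):
    """Round a decimal string to an integer under every mode."""
    value = Decimal(text)
    return {
        name: int(value.quantize(Decimal("1"), rounding=mode))
        for name, mode in MODES.items()
    }


print(round_all("2.7"))   # {'nearest': 3, 'toward +inf': 3, 'toward -inf': 2, 'toward 0': 2}
print(round_all("-2.7"))  # {'nearest': -3, 'toward +inf': -2, 'toward -inf': -3, 'toward 0': -2}
```

Truncation always moves toward 0, so its error has the same direction for every value of a given sign, which is one way to see why it is the noisiest mode.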

Interpreting Floating Point