Uncertainty

- General situation:

- Observed variables (evidence): Agent knows certain things about the state of the world

- Unobserved variables: Agent needs to reason about other aspects

- Model: Agent knows something about how the known variables relate to the unknown variables

- Probabilistic reasoning gives us a framework for managing our beliefs and knowledge

Probabilities

- Probability space $(\Omega, F, P)$

- $\Omega$ = Sample space = possible outcomes

- F = Events = a set of subsets of $\Omega$

- An event is a subset of $\Omega$

- P = Probability measure on F

- P(A) = probability of event A

- Example: Event A = Getting 1 = $\{1\}$

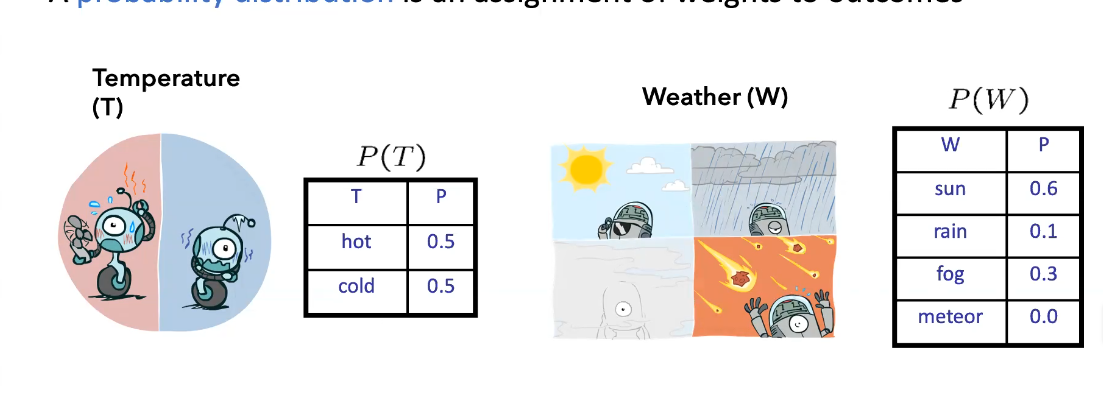

Random variables

A random variable is a function that maps from $\Omega$ to some range (the set of values it can take on)

- C = Head or Tail?

- T = Is the temperature hot or cold?

- D = the sum of two dice

- We denote random variables with capital letters

- The example random variables have these ranges:

- C in {heads, tails}

- T in {hot, cold}

- D in {2, …, 12}
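To make the idea of a random variable as a function concrete, here is a minimal Python sketch for D, the sum of two dice. It assumes two fair six-sided dice (fairness is an assumption, not stated in the notes), and the names `omega`, `p_outcome`, and `distribution` are made up for this illustration.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Sample space: all ordered outcomes of rolling two six-sided dice.
omega = list(product(range(1, 7), repeat=2))

# Assumption: fair dice, so each of the 36 outcomes has probability 1/36.
p_outcome = Fraction(1, len(omega))


def D(outcome):
    """Random variable D: maps an outcome to the sum of the two dice."""
    return outcome[0] + outcome[1]


def distribution(random_variable):
    """Collect the outcome probabilities by the value the variable takes."""
    dist = defaultdict(Fraction)
    for outcome in omega:
        dist[random_variable(outcome)] += p_outcome
    return dict(dist)


dist_D = distribution(D)
print(sorted(dist_D))  # [2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]
print(dist_D[7])       # 1/6
```

Using `Fraction` keeps the arithmetic exact, so the distribution sums to exactly 1.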

Probability Distributions

- A probability distribution is an assignment of weights to outcomes

- The weights are non-negative and sum to 1: $\forall x: P(X = x) \ge 0$ and $\sum_x P(X = x) = 1$

- The expected value of a random variable is the average, weighted by the probability distribution over outcomes

- Example: How long to get to the airport?

| Time | 65 min | 75 min | 90 min |
|---|---|---|---|
| P(T) | 0.25 | 0.5 | 0.25 |

- Multiply each time by its probability and add the results: $0.25 \cdot 65 + 0.5 \cdot 75 + 0.25 \cdot 90 = 76.25$ min
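The airport calculation can be sketched in a few lines of Python. The numbers come from the example above; the variable names are chosen only for this sketch.

```python
# Travel times (minutes) and their probabilities from the airport example.
times = [65, 75, 90]
probs = [0.25, 0.5, 0.25]

# A valid distribution has non-negative weights that sum to 1.
assert all(p >= 0 for p in probs)
assert abs(sum(probs) - 1.0) < 1e-9

# Expected value: the probability-weighted average of the outcomes.
expected_time = sum(t * p for t, p in zip(times, probs))
print(expected_time)  # 76.25
```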

What are Probabilities?

- Objectivist/frequentist answer:

- Averages over repeated experiments (E.g. empirically estimating P(rain) from historical observation)

- Assertion about how future experiments will go

- New evidence changes the reference class

- Makes one think of inherently random events, like rolling dice

- Subjectivist/Bayesian answer:

- What if the event is not easily repeatable? E.g. the probability that you are reading this right now

- Degrees of belief about unobserved variables (e.g. an agent’s belief that it’s raining, given the temperature, or Pacman’s belief that the ghost will turn left, given the current state)

- Often these probabilities are learnt from past experiences

- New evidence updates your beliefs

- Most machine learning and AI methods take the Bayesian approach

Joint Distributions

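- A joint distribution over a set of random variables $X_1, \dots, X_n$ specifies a probability for each assignment (outcome): $P(X_1 = x_1, \dots, X_n = x_n)$

- Size of the distribution for a set of $n$ variables with range sizes $d$? $d^n$ entries

- For all but the smallest distributions it’s impractical to write out

- An event is a set of outcomes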

- From a joint distribution, we can calculate the probability of any event

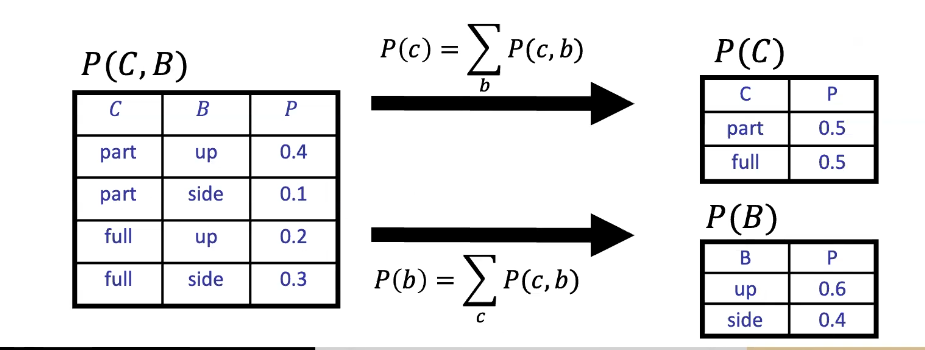
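As a rough illustration of computing event probabilities from a joint table, the sketch below stores a joint distribution as a dictionary and sums the entries belonging to an event. The variables T (temperature) and W (weather) and all the numbers are invented for this example; they are not taken from the notes or the figure.

```python
# Hypothetical joint distribution P(T, W); the values are illustrative only.
joint = {
    ("hot", "sun"): 0.4,
    ("hot", "rain"): 0.1,
    ("cold", "sun"): 0.2,
    ("cold", "rain"): 0.3,
}


def prob(event):
    """P(event): sum the joint entries of the outcomes the event contains.

    `event` is a predicate that returns True for outcomes inside the event.
    """
    return sum(p for outcome, p in joint.items() if event(*outcome))


p_hot_and_sun = prob(lambda t, w: t == "hot" and w == "sun")  # about 0.4
p_hot = prob(lambda t, w: t == "hot")                         # about 0.5
p_hot_or_sun = prob(lambda t, w: t == "hot" or w == "sun")    # about 0.7
```

Representing an event as a predicate mirrors the definition: an event is just the set of outcomes for which the predicate holds.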

Marginal Distributions

- Marginal distributions are sub-tables which eliminate variables

- Marginalization (summing out): Combine collapsed rows via addition

- These are still valid probability distributions: every entry is greater than or equal to 0 and the entries add up to 1

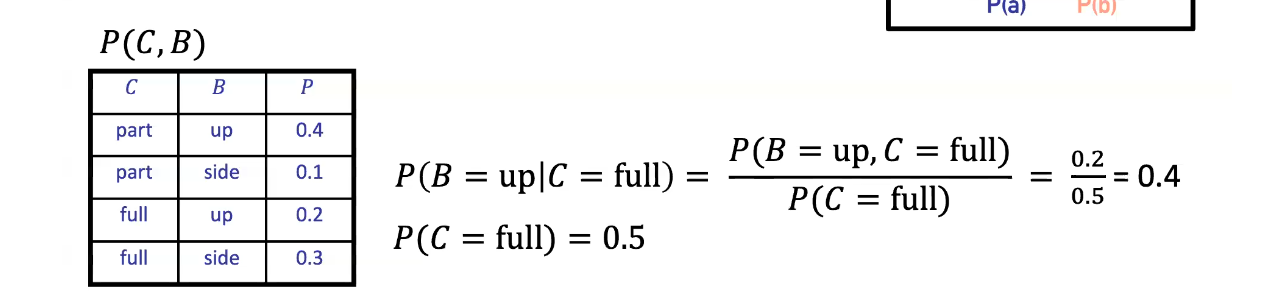
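Summing out can be sketched as follows. The joint table over T and W is hypothetical (the numbers are invented for illustration), and `marginal` is a helper name made up for this sketch.

```python
from collections import defaultdict

# Hypothetical joint distribution P(T, W); the values are illustrative only.
variables = ("T", "W")
joint = {
    ("hot", "sun"): 0.4,
    ("hot", "rain"): 0.1,
    ("cold", "sun"): 0.2,
    ("cold", "rain"): 0.3,
}


def marginal(joint, variables, keep):
    """Sum out every variable except `keep`."""
    idx = variables.index(keep)
    result = defaultdict(float)
    for outcome, p in joint.items():
        # Rows that agree on the kept variable collapse into one entry.
        result[outcome[idx]] += p
    return dict(result)


p_T = marginal(joint, variables, "T")  # roughly {"hot": 0.5, "cold": 0.5}
p_W = marginal(joint, variables, "W")  # roughly {"sun": 0.6, "rain": 0.4}
```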

Conditional Probabilities

A simple relation between joint and marginal probabilities

- The mathematical definition: $P(x|y) = \frac{P(x, y)}{P(y)}$

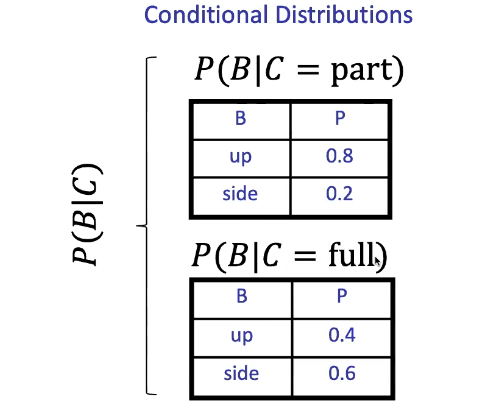

- Conditional distributions are probability distributions over some variables given fixed values of others

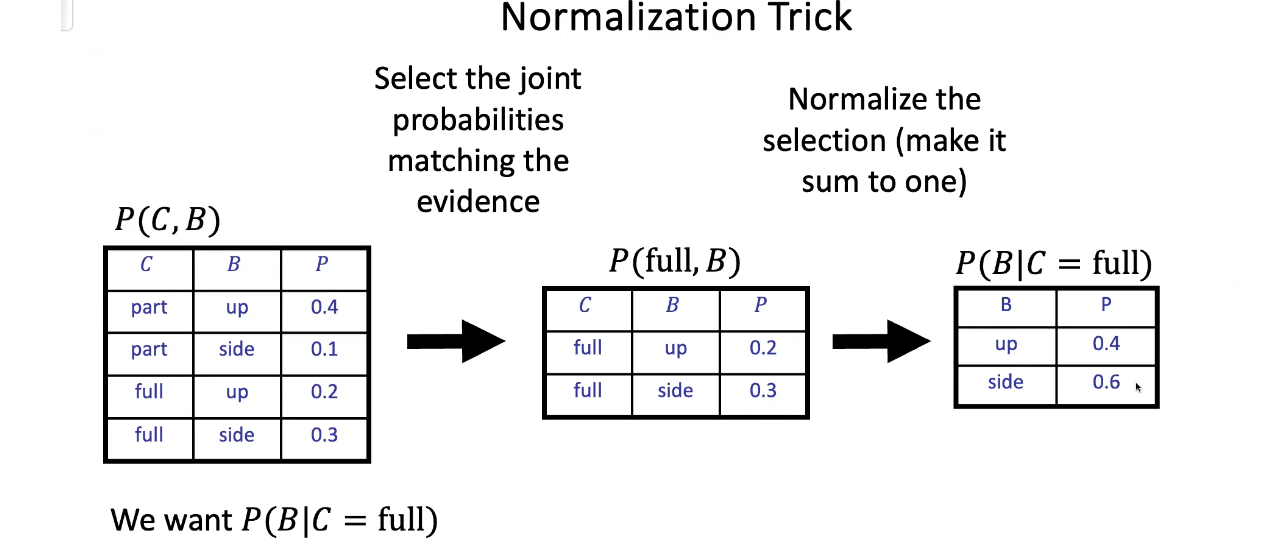
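A minimal sketch of the definition, dividing a joint entry by the matching marginal. The table over T and W is hypothetical (values invented for illustration), and `conditional` is a name chosen only for this example.

```python
# Hypothetical joint distribution P(T, W); the values are illustrative only.
joint = {
    ("hot", "sun"): 0.4,
    ("hot", "rain"): 0.1,
    ("cold", "sun"): 0.2,
    ("cold", "rain"): 0.3,
}


def conditional(joint, w_value):
    """P(T | W = w_value) = P(T, W = w_value) / P(W = w_value)."""
    # Marginal probability of the evidence, P(W = w_value).
    p_w = sum(p for (t, w), p in joint.items() if w == w_value)
    # Divide each matching joint entry by that marginal.
    return {t: p / p_w for (t, w), p in joint.items() if w == w_value}


p_T_given_rain = conditional(joint, "rain")  # roughly {"hot": 0.25, "cold": 0.75}
```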

Normalization Trick

We want P(B|C = full)
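The trick can be sketched as "select, then normalize". The notes do not give the table for B and C, so the range of B (`"b1"`, `"b2"`), the second value of C (`"empty"`), and every number below are placeholders invented for this sketch.

```python
# Hypothetical joint P(B, C); ranges and values are placeholders only.
joint_BC = {
    ("b1", "full"): 0.30,
    ("b2", "full"): 0.10,
    ("b1", "empty"): 0.20,
    ("b2", "empty"): 0.40,
}


def normalize(weights):
    """Rescale non-negative weights so that they sum to 1."""
    total = sum(weights.values())
    return {key: value / total for key, value in weights.items()}


# Select the joint entries consistent with the evidence C = full.
selected = {b: p for (b, c), p in joint_BC.items() if c == "full"}

# Normalizing the selection divides by its sum, which is P(C = full),
# so the result is P(B | C = full).
p_B_given_full = normalize(selected)  # roughly {"b1": 0.75, "b2": 0.25}
```

The point of the trick is that P(C = full) never has to be computed separately: it is the sum of the selected entries.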

Independence

Two variables are independent if:

- $\forall x, y: P(x, y) = P(x) P(y)$

- This says that their joint distribution factors into a product of two simpler distributions

- Another form: $\forall x, y: P(x|y) = P(x)$

- Independence is a simplifying modeling assumption

- Empirical joint distributions are at best close to independent

- What could we assume for { Weather, Traffic, Cavity, Toothache }?

- Weather and Cavity are probably independent of each other

- Conditional independence

- Unconditional (absolute) independence is very rare

- Conditional independence is our most basic and robust form of knowledge about uncertain environments

- X is conditionally independent of Y given Z if and only if $\forall x, y, z: P(x, y|z) = P(x|z) P(y|z)$

- The two types of independence do not imply each other
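One way to test (unconditional) independence on a two-variable joint table is to compare every entry with the product of its marginals. This is a sketch under assumptions: both tables are invented for illustration, and the tolerance `tol` is an arbitrary choice for floating-point comparison.

```python
from itertools import product


def is_independent(joint, tol=1e-9):
    """Check whether P(x, y) == P(x) * P(y) holds for every pair (x, y)."""
    xs = {x for x, _ in joint}
    ys = {y for _, y in joint}
    # Marginals obtained by summing out the other variable.
    p_x = {x: sum(joint.get((x, y), 0.0) for y in ys) for x in xs}
    p_y = {y: sum(joint.get((x, y), 0.0) for x in xs) for y in ys}
    return all(
        abs(joint.get((x, y), 0.0) - p_x[x] * p_y[y]) <= tol
        for x, y in product(xs, ys)
    )


# Hypothetical tables; the values are illustrative only.
dependent = {
    ("hot", "sun"): 0.4, ("hot", "rain"): 0.1,
    ("cold", "sun"): 0.2, ("cold", "rain"): 0.3,
}
independent = {
    ("hot", "sun"): 0.3, ("hot", "rain"): 0.2,
    ("cold", "sun"): 0.3, ("cold", "rain"): 0.2,
}

print(is_independent(dependent))    # False
print(is_independent(independent))  # True
```

With empirical data the products rarely match exactly, so in practice the tolerance would be much looser than the one used here.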

Probabilistic Inference

- Probabilistic inference: compute a desired probability from other known probabilities

- We generally compute conditional probabilities

- P(on time | no reported accidents) = 0.9

- These represent the agent’s beliefs given the evidence

- Observing new evidence causes beliefs to be updated

- Inference by Enumeration

- General case:

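- Evidence variables: $E_1, \dots, E_k = e_1, \dots, e_k$

- Query variable: $Q$

- Hidden variables: $H_1, \dots, H_r$

- We want $P(Q | e_1, \dots, e_k)$

- Step 1: Select the entries consistent with the evidence

- Step 2: Sum out H to get the joint of query and evidence: $P(Q, e_1, \dots, e_k) = \sum_{h_1, \dots, h_r} P(Q, h_1, \dots, h_r, e_1, \dots, e_k)$

- Step 3: Normalize: $P(Q | e_1, \dots, e_k) = \frac{1}{Z} P(Q, e_1, \dots, e_k)$, where $Z = \sum_q P(q, e_1, \dots, e_k)$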
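The three steps can be sketched over an explicit joint table. Everything specific here is an assumption made for illustration: the variables S (season), T (temperature), and W (weather), the numbers in the table, and the helper name `infer`.

```python
from collections import defaultdict

# Hypothetical joint distribution P(S, T, W); the values are illustrative only.
VARIABLES = ("S", "T", "W")
joint = {
    ("summer", "hot", "sun"): 0.30,
    ("summer", "hot", "rain"): 0.05,
    ("summer", "cold", "sun"): 0.10,
    ("summer", "cold", "rain"): 0.05,
    ("winter", "hot", "sun"): 0.10,
    ("winter", "hot", "rain"): 0.05,
    ("winter", "cold", "sun"): 0.15,
    ("winter", "cold", "rain"): 0.20,
}


def infer(query, evidence):
    """Return P(query | evidence) by enumerating the joint table."""
    q_idx = VARIABLES.index(query)
    unnormalized = defaultdict(float)
    for outcome, p in joint.items():
        # Step 1: keep only the entries consistent with the evidence.
        consistent = all(
            outcome[VARIABLES.index(var)] == val for var, val in evidence.items()
        )
        if consistent:
            # Step 2: adding entries that share a query value sums out
            # the hidden variables.
            unnormalized[outcome[q_idx]] += p
    # Step 3: normalize so that the result sums to 1.
    z = sum(unnormalized.values())
    return {value: p / z for value, p in unnormalized.items()}


p_W_given_winter = infer("W", {"S": "winter"})  # T is hidden here
p_W_given_winter_hot = infer("W", {"S": "winter", "T": "hot"})
```

The cost is linear in the size of the joint table, which grows exponentially with the number of variables, so this approach is only practical for small models.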

- The Product Rule

- Sometimes we have conditional distributions but want the joint distribution: $P(x, y) = P(x|y) P(y)$
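A small sketch of the product rule, building a joint table from a marginal and a conditional table. The variables W (weather) and D (whether the ground is wet or dry) and all values are invented for illustration.

```python
# Hypothetical marginal P(W) and conditional P(D | W); illustrative values.
p_W = {"sun": 0.8, "rain": 0.2}
p_D_given_W = {
    ("wet", "sun"): 0.1,
    ("dry", "sun"): 0.9,
    ("wet", "rain"): 0.7,
    ("dry", "rain"): 0.3,
}

# Product rule: P(d, w) = P(d | w) * P(w) for every pair (d, w).
joint_DW = {(d, w): p_d_w * p_W[w] for (d, w), p_d_w in p_D_given_W.items()}

# The result is a valid joint distribution: its entries sum to 1.
total = sum(joint_DW.values())
```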

- The Chain Rule

- More generally, we can always write any joint distribution as an incremental product of conditional distributions: $P(x_1, x_2, \dots, x_n) = \prod_i P(x_i | x_1, \dots, x_{i-1})$

- Basically a repeated application of the product rule
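The chain rule can be checked numerically on a small table: computing P(s), P(t|s), and P(w|s,t) from the joint and multiplying them should reproduce each joint entry. The three-variable table and the helper names `prob` and `chain_rule_product` are assumptions made for this sketch.

```python
# Hypothetical joint distribution P(S, T, W); the values are illustrative only.
VARIABLES = ("S", "T", "W")
joint = {
    ("summer", "hot", "sun"): 0.30,
    ("summer", "hot", "rain"): 0.05,
    ("summer", "cold", "sun"): 0.10,
    ("summer", "cold", "rain"): 0.05,
    ("winter", "hot", "sun"): 0.10,
    ("winter", "hot", "rain"): 0.05,
    ("winter", "cold", "sun"): 0.15,
    ("winter", "cold", "rain"): 0.20,
}


def prob(**fixed):
    """Probability of a partial assignment, e.g. prob(S="winter")."""
    return sum(
        p
        for outcome, p in joint.items()
        if all(outcome[VARIABLES.index(k)] == v for k, v in fixed.items())
    )


def chain_rule_product(s, t, w):
    """P(s) * P(t | s) * P(w | s, t), each factor derived from the joint."""
    p_s = prob(S=s)
    p_t_given_s = prob(S=s, T=t) / p_s
    p_w_given_st = prob(S=s, T=t, W=w) / prob(S=s, T=t)
    return p_s * p_t_given_s * p_w_given_st


# Each product should match the corresponding joint entry.
max_error = max(abs(chain_rule_product(*o) - p) for o, p in joint.items())
```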

- Bayes’ Rule

- Two ways to factor a joint distribution over two variables: $P(x, y) = P(x|y) P(y) = P(y|x) P(x)$

- Dividing by $P(y)$, we get

- $$P(x|y) = \frac{P(y|x)}{P(y)} P(x)$$
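As a quick numeric illustration of Bayes’ rule, the sketch below inverts a conditional. The scenario (weather W and an observation "wet") and all the numbers are invented for this example; P(y) is obtained by summing P(y|x) P(x) over the values of x.

```python
def bayes(p_y_given_x, p_x, p_y):
    """Bayes' rule: P(x | y) = P(y | x) / P(y) * P(x)."""
    return p_y_given_x / p_y * p_x


# Hypothetical prior P(W) and likelihood P(wet | W); illustrative values.
p_W = {"rain": 0.2, "sun": 0.8}
p_wet_given_W = {"rain": 0.7, "sun": 0.1}

# P(wet) = sum over w of P(wet | w) * P(w), about 0.22 here.
p_wet = sum(p_wet_given_W[w] * p_W[w] for w in p_W)

# Posterior belief in rain after observing "wet", about 0.636.
p_rain_given_wet = bayes(p_wet_given_W["rain"], p_W["rain"], p_wet)
```

Observing "wet" raises the belief in rain from the prior 0.2 to roughly 0.64, which is the sense in which new evidence updates beliefs.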